
There aren't arguments here but rather a disclaimer. Leiter will sometimes use the language of "taking down" the PGR or of a "slave revolt" among philosophers at lower-ranked PGR schools (on reflection I'm not 100% sure on this but it seems on-brand). Honestly, I give roughly zero fucks if I ever work at a ranked institution. That's not a thing that will add to my quality of life. My big concern is that the PGR is being sold as a ranking system and a guide to choosing grad programs. Now that's true: it's a ranking of programs based on surveys of philosophers. But whether it's a good guide to choosing a grad program is an entirely different ball of wax. Go back to the brewery analogy: if your tastes are for Rainier and PBR, then you're not going to care about the latest microbrew out of Portland, OR. You might like it. Or not. But it's your tasting that determines the liking, not the preferences of experts. And to say that the tastes of the experts are normative is worrisome. The experts might like the microbrew but it's not the case that you're failing if you don't.

Anyhow, I'm not looking to replace or "take down" the PGR. I still have to wash the dishes no matter whether the PGR implodes tomorrow or becomes mandatory reading for undergrads. My main concern is for us as a group of professionals to get a better sense of what the data are telling us.


Previously I argued that the PGR is not a good measure of likelihood of job placement. I came across this post from Leiter Reports which suggests that the PGR isn't just for placements simpliciter but for good placements, where "good" means something like "a high-quality, PhD-granting institution." How do we determine if one good placement is better than another? By the hiring institution's ranking on the PGR.

I don't find this argument -- that PGR is a good measure of good job placement and not just job placement -- terribly interesting. First, there are lots of reasons why someone wouldn't want to work at a high-PGR school. I like my Jesuit SLAC because we focus on teaching and I have a lot of support from the admin to try new ideas, both in teaching and research. And nobody is anal about the job: most of us pursue some kind of work-life balance and we have the support of our colleagues in pursuing it.

Second, HAVE YOU SEEN THE GODDAMN JOB MARKET LATELY??? You might lovingly call it a "shit-show" but that's kind of an insult to shit-shows. My sample size is small and biased, but the jaw of every non-philosopher academic I've talked to drops when I tell them that it's normal to get 300-500 applications for an open/open position. While nobody wants a toxic work environment (which, I'm guessing, occurs at all levels of professional philosophy), I think many folks consider themselves lucky to find a job in academia. So using the PGR as a way of predicting good job placements is out of step with the lived realities of finding an academic job. The market has driven us from wanting good jobs to wanting merely jobs. We've got loans to pay and mouths to feed.

That all brings me to today's subject: perhaps the PGR is good at predicting the quality of philosophy one hopes to do. On average, the suggestion goes, products of higher-ranking PGR programs do better philosophy than products of lower-ranking PGR programs.

Now if you're thinking to yourself, "hey not-so-smart guy, you went to Fordham, which isn't very highly ranked, so clearly you've got an axe to grind!" lemme stop you right there: I have very little love for my alma mater for a wide variety of reasons. I have no desire whatsoever to budge Fordham's spot in the PGR. Back to the matter at hand.
First, the biggest issue: if we're going to say that PGR ranking predicts the quality of philosophy that is likely to be done by graduates, we need some metric of quality of philosophy. As far as I know, the only one offered is the same as for smut: you know it when you see it.

So let's run with this for a second. We know good philosophy when we see it. Presumably this means something like, "in reading certain kinds of philosophy, we experience X," where 'X' is short for a set of positive thoughts and feelings. And I'm sure we've all had this experience. Reading William James got me into philosophy in the first place, and I've gotten that feeling reading (in no particular order) Aristotle, Wittgenstein, Andy Clark, Susan Stebbing, Alva Noe, Jenny Saul, Alvin Goldman, Mary Midgley and Richard Menary, among many others. The PGR, then, predicts that graduates of more highly-ranked programs are more likely than graduates of lower-ranked programs to write material that enables you to experience X, provided your tastes are like those of the raters.

On this view, the PGR works kind of like a ranking of vineyards or (what I'm more familiar with) breweries. Beer-ranking experts might say that products of brewery A are superior to products of brewery B. So the best way to think about the PGR is as a taste-guide for consumers of philosophy: experts agree that the philosophy of mind coming out of NYU's grads is superior to that coming out of Stanford's grads.

Fortunately, there's a relatively easy way to see if the PGR makes good predictions on this score. (Relatively easy in principle, at least; we don't need anything like Twin Earth or Laplace's Demon.) And this is a study that the APA should definitely fund. Pick some number of grads from schools at every tier of the PGR. (I think you could do this with the general ranking but it might work better with the specialties.)
Commission them to write a short (~3k) paper of their choosing, but prepare it as they would for peer review: absolutely no identifying information. (In exchange, perhaps these papers could come out in a special issue of the Journal of the APA, or the authors could be compensated for their time in some other way.) Then, give these papers to other philosophers and have them guess the tier of school the author comes from. We can instruct raters that they are to pay attention to the "you know it when you see it" criterion for quality philosophy, since that's what we agreed to at the start of this investigation. If it turns out that raters are pretty accurate, then we might regard the PGR as a reliable guide to the quality of philosophy their grads produce.

Now we began this by suggesting that "good philosophy" produces a kind of feeling. But what if that's the wrong metric? Maybe we rank "good philosophy" by some weighting of publications, citations, awards, grants, whatever. On this suggestion, higher-ranked PGR programs produce grads who produce more papers at higher-ranked venues that are more often cited (and so on) and who also win more grants than grads of lower-ranked PGR programs. I think I have some beef with that definition of "good philosophy" but let's run with it for now. Testing it is a (relatively) simple task that can be done by gathering publicly available data. (At least, I think most of it is publicly available.) So then we just need someone with the patience, financial support, and data analysis chops to gather publication info, citation rates, and grant-winner info (prob from NEH, NSF, and Templeton among others). These can be given weights, or a range of weights, and then we can see if the PGR makes good predictions.

Either way, the key is to remember:

1. the PGR is a survey expressing preferences,
2. if the survey data is to be useful, it has to make predictions about grads, and
3. these predictions are testable.
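To make the "give them weights and see if the PGR predicts" idea a bit more concrete, here's a minimal sketch. Everything in it is an assumption for illustration: the five programs, their numbers, the weights, and the function names are all made up, and Spearman's rank correlation is just one reasonable way to ask whether two orderings agree. Each metric is scaled to its largest value first so that raw citation counts don't swamp everything else.

```python
from statistics import mean


def scale_to_max(values):
    """Scale non-negative numbers so the largest becomes 1.0."""
    top = max(values)
    return [v / top if top else 0.0 for v in values]


def average_ranks(values):
    """Rank from largest (rank 1) to smallest; tied values share an average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions in the tie
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation; assumes neither list is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread = (sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys)) ** 0.5
    return cov / spread


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


def composite_scores(metrics, weights):
    """Weighted sum of max-scaled metrics; `metrics` maps name -> one value per program."""
    scaled = {name: scale_to_max(vals) for name, vals in metrics.items()}
    n = len(next(iter(scaled.values())))
    return [sum(weights[name] * scaled[name][i] for name in weights) for i in range(n)]


# Entirely made-up numbers for five hypothetical programs (not real PGR data).
pgr_mean_rating = [4.6, 4.1, 3.5, 2.9, 2.2]
metrics = {
    "publications": [40, 35, 38, 20, 22],
    "citations": [900, 500, 650, 200, 260],
    "grants": [6, 5, 3, 2, 2],
}
weights = {"publications": 0.4, "citations": 0.4, "grants": 0.2}  # hypothetical weighting

scores = composite_scores(metrics, weights)
print(round(spearman(pgr_mean_rating, scores), 3))
```

Rerunning this over a range of weights would show whether any agreement with the PGR ordering is robust or just an artifact of one particular weighting.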
You can use Web of Knowledge to get a handle on the most important papers in a field you're just starting to dabble in (or discover important papers that might have slipped under your radar). From the last post we saw how to search Web of Knowledge. After you land on the page returning the citations for your query, sort by "Times Cited." (Sorting options are above your returned citations.) You'll see a little arrow next to "Times Cited" -- down means most-to-least cited (selecting "Times Cited" again reorders from least-to-most). Voila! You have a list of citations from most to least cited. Of course, there are limitations:

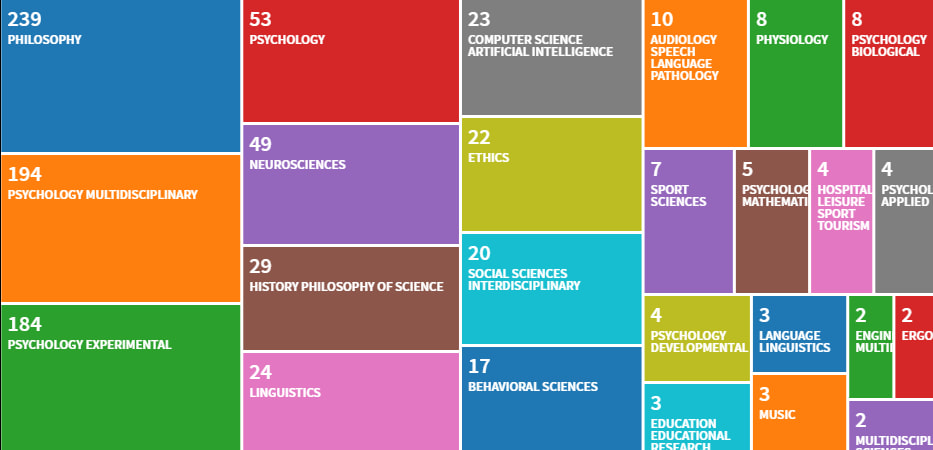

1. This does not include books. The citations from your query are only what's logged in Web of Science. Admittedly, this is more journals than you can shake a stick at (most of which you've probably never heard of), but just know that books aren't included.
2. Any journals that aren't logged in Web of Science aren't included. My hunch is that journals that aren't logged are super obscure and likely aren't the best place to get up to speed on essential papers within your subfield.

I told my friend Joe Vukov about using Web of Knowledge to figure out which journals are publishing in which areas. (E.g. which journal has been publishing the greatest volume of work on the epistemology of disagreement or on Stoic ethics.) He said it was a great idea to share with grad students, so I'm providing a more fine-grained account of the steps to discover who's publishing on what topics.

If you happen to be reading this a year (or more) after I've written it, then the number of returns you get may be different from mine. So take the numbers here as indicating a snapshot of research volume on 4E cognition at a particular moment in time. The instructions here suppose that you're following along at home. I suppose I could have used screenshots but I have two monitors and it's kind of a pain in the tuckus to get all and only what I want, hence the egocentric directions.

Does your school have a subscription? I found mine by calling up the library, and they told me to look under the 'databases' tab on the library webpage. Lo and behold, there it was. Create a login for yourself.

I prefer to use the advanced search since it puts more tools at your disposal, but I got started by playing with the basic search function. In a nutshell: you put in your search parameters, and the site finds every citation fitting those parameters since 1965. Not up to speed on your Boolean operators? They have a tutorial. (You find it by going to 'advanced search.'
You'll see a few links telling you where to go.) I go a little overboard with the parentheses only because I can never remember which operators take priority and I'm too lazy to look it up every time.

I wanted to find out where folks have been publishing on 4E cognition in the last 3 years. So I used the following search term:

TS=("extended mind*" OR "extended cognition" OR "enactive mind*" OR "enactive cognition" OR "enactivism" OR "embodied mind*" OR "embodied cognition" OR "embedded mind*" OR "situated mind*" OR "embedded cognition" OR "situated cognition")

'TS' is topic; an asterisk matches any string that starts with the part before it -- so the initial string plus anything else attached to it. E.g. 'mind*' will catch both 'mind' and 'minds'.

This returned 3,828 results. If you look under "Web of Science Categories" in the left-hand menu, you'll see that the results cover psych and philosophy, but also literature, religion, management, sports science, and a bunch of other things. I only want the psych (the 1st, 2nd, and 5th in the list for me) and phil (3rd in the list) results, so I'll pick those and hit "refine". Now we're down to 2,035 citations. I also only want stuff published in the last 3 years, so I'll select the years I want under "Publication Years" in the left panel. This gets me 624 citations.

First, can we appreciate that 624 items have been published on 4E cognition between January 2017 and December 2019 in philosophy and psychology alone? Holy shit.

Ok, back to the task: go to "Analyze Results" (towards the top right) and you'll get a treemap of citations by topic. (I changed 'number of results' to 25 because I wanted as many top results as possible, and 25 is the limit.) Here's what that looks like. What the hell? I refined for 'philosophy' and 'psychology' but 'sports sciences' still made it into the analysis!
I can't find anything about it on the website, but I'm assuming that if a citation is tagged as both A and B, then refining for tag A means that tag B comes along too. So a tag drops out only if it's never conjoined with the filtered-for tags, or if it's specifically excluded (which is another option when refining). That's all fine. It doesn't affect what we're doing here: we want to know which journals are publishing 4E work, and that doesn't depend on whether Web of Science's categories are mutually exclusive or how the filters work at this level. It may be important if you want to do other analyses.

Now to get journal titles, select "Source Titles" in the left-hand menu, and you'll get the following visualization. This tells me the top three journals publishing the greatest volume of 4E papers are Frontiers, PCS, and Synthese. Also, I had no idea that the Italian Journal of Cognitive Sciences has published more papers on 4E cognition than Cognitive Science. That'll teach me to pay attention to only English-language journals. (In case you're curious, it publishes papers in both English and Italian.)

Keep in mind some limitations:

1. It doesn't tell me if the work is critical (or not)
2. There's no information about special issues, which could inflate the numbers
3. The numbers aren't relative to the total volume of work the journal publishes

Nonetheless, the visualization gives me a good sense of which editors are less likely to give 4E papers a desk reject for not fitting with the journal's recent trends. Neato, right?

Now, suppose you want to expand your search for journals publishing 4E stuff since the 1960s. One thing to remember is that every time you refine your search, Web of Science counts it as a new search. So if you go to "Search History" you should be able to find your previous searches. I want to see historically who's been the greatest publisher of 4E work, so I select my search that refined for category but not year.
Here's what that looks like:

I think there are some neat observations here. Frontiers and PCS are still the top 2. Phil Psych moves from 5th to 3rd. Synthese goes from 3rd to 10th -- suggesting they got into the 4E game more recently. Same thing for Adaptive Behavior. And Analysis doesn't make the top 25, despite publishing Clark and Chalmers's 1998 paper "The Extended Mind"!

One thing to keep in mind is that some journals in the list are generalist ones (Synthese, Cognitive Science) and others are specialists (Philosophical Psychology, PCS), so you'd expect the specialists to have more 4E papers than the generalists. Another thing to keep in mind here is when the journal was founded. Older journals have a leg up on newer ones in several ways, but Frontiers is pretty darned new (definitely newer than Synthese) soooo.....

But enough of my weird interest in the shifting sands that is the culture of professional philosophy. We've got here an easy way to learn who's publishing what. Any questions or comments, please feel free to write!
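If you'd rather not type out all those quotes and ORs by hand, the search string above can be assembled from a plain list of phrases. This is only a sketch: `build_topic_query` and `matches_wildcard` are names I made up, and the wildcard check uses Python's shell-style `fnmatchcase` as a rough local stand-in for the trailing asterisk, not Web of Science's actual matching engine.

```python
from fnmatch import fnmatchcase


def build_topic_query(terms):
    """Wrap each phrase in double quotes and OR them inside a TS=(...) topic query."""
    quoted = ['"' + term + '"' for term in terms]
    return "TS=(" + " OR ".join(quoted) + ")"


def matches_wildcard(pattern, text):
    """Rough illustration of the asterisk: 'mind*' matches 'mind', 'minds', etc."""
    return fnmatchcase(text, pattern)


# The same phrases, in the same order, as the search term used in the post.
terms = [
    "extended mind*", "extended cognition",
    "enactive mind*", "enactive cognition", "enactivism",
    "embodied mind*", "embodied cognition",
    "embedded mind*", "situated mind*",
    "embedded cognition", "situated cognition",
]

query = build_topic_query(terms)
print(query)  # paste the printed string into the advanced search box
```

Keeping the phrases in a list makes it easy to add or drop a term and regenerate the query without hunting for a stray parenthesis.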

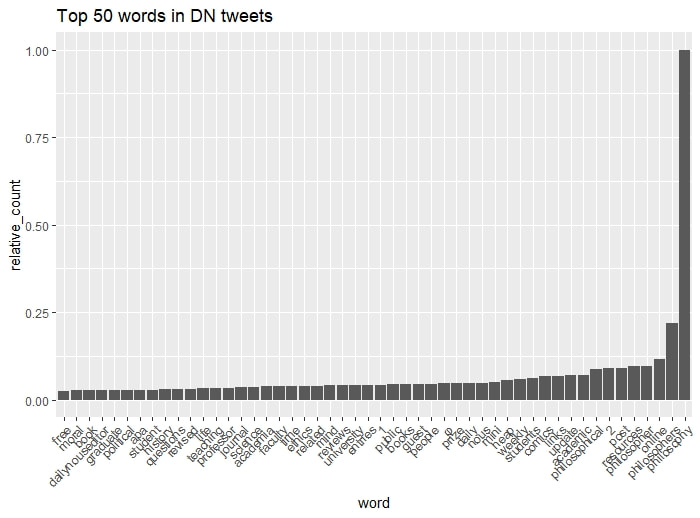

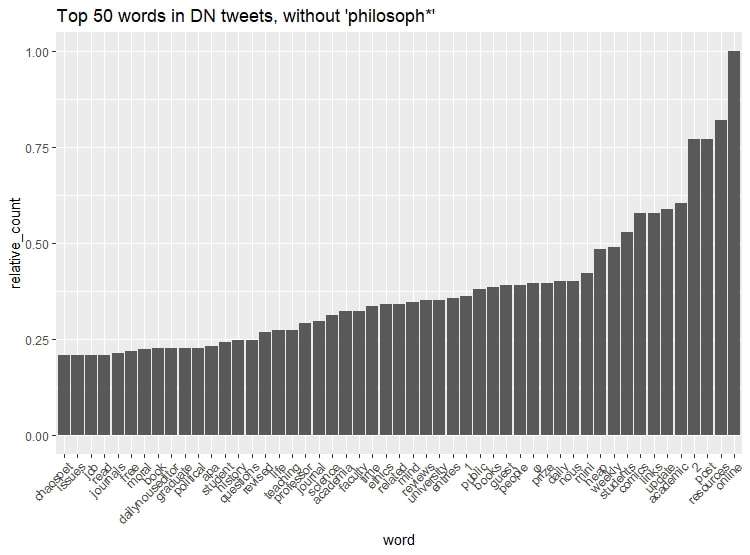

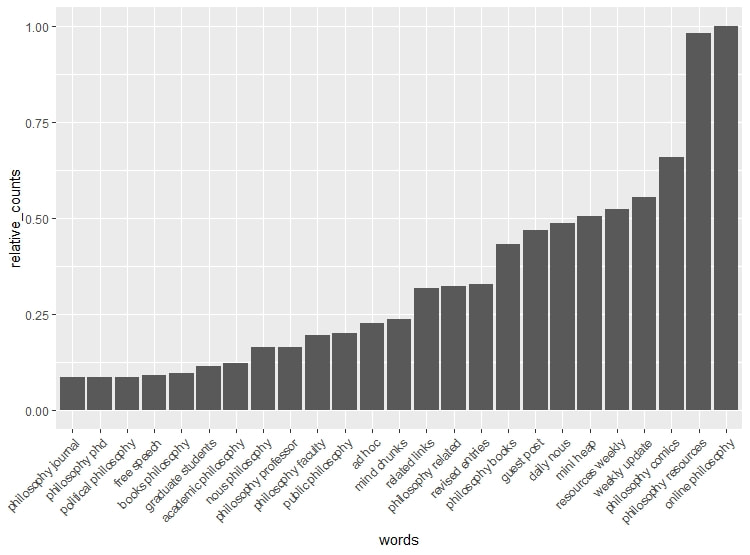

I've been thinking about the Philosophical Gourmet Report recently. When Leiter started it way back when, he says, it was meant to act as a guide for grad students, to make sure they didn't get suckered into attending a subpar program in the hopes of being well-positioned for a job. Since those early days, the PGR has grown substantially in content, participation, and influence. (Shit, it's published by Blackwell!) And Leiter is no longer the Grand Poobah; Berit Brogaard and Christopher Pynes are.

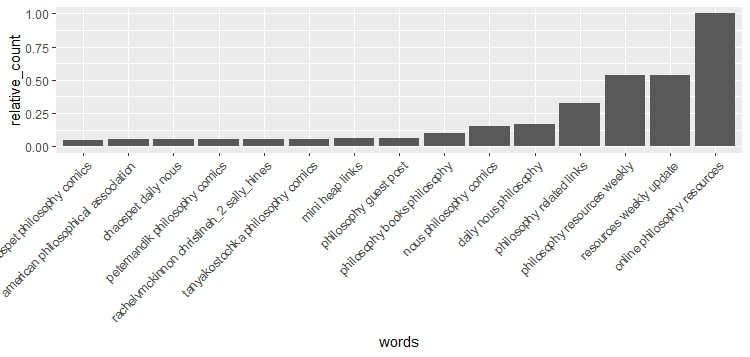

What does the PGR do? From the website, "This Report tries to capture existing professional sentiment about the quality and reputation of different Ph.D. programs as a whole and in specialty areas in the English-speaking world." That's all well and good, but what do we want with the captured professional sentiment? What do we do with info like, "Population P expresses a preference for Program A over Program B"? Here are two possibilities: (1) we use the info to make guesses about the likelihood of the professional success of graduates from a program; (2) we use the info to make guesses about the quality of philosophy being done at a program. (1) suggests that the PGR is helpful for students deciding where to go to increase their odds of getting a job. (2) suggests that the PGR is helpful for students deciding where to go to do really good philosophy. We'll do (1) today and (2) another day.

(1) Measuring professional success

First things first: what the heck is meant by "professional success"? Here are a few candidates: (a) getting job offers/positions, in particular tenure-track offers/positions; (b) publishing; (c) publishing and being cited; ... or some combination of these. So one way to interpret the findings of the PGR is to say that a program's being more highly ranked increases the likelihood that graduates from that program will experience greater professional success. In less Byzantine prose: program ranking positively correlates with professional success. The PGR makes this kind of a claim on its website: "These sentiments correlate fairly well with job placement of junior faculty in continuing positions in universities and colleges..."

Here's my criticism: if what we're really interested in are correlations between programs and job placements, then surveying philosophers is an indirect and shitty measure. Why? We're asking philosophers to rank a program from 0 (inadequate) to 5 (distinguished) based on the department's current faculty.
What philosophers think about a group of philosophers employed by the same department gives an impression of what philosophers think about that department, but not necessarily of whether that department does a good job at placing its students. The method delivers the opinions of philosophers about other departments, not whether departments do a good job of placing graduates.

"Maybe we're not interested in placement. Maybe we want to rank departments for other reasons!" Cool cool cool cool cool. But given the living hell that is the philosophy job market, I don't think folks should be concerned about ranking departments if predictions about grad placement aren't part of why we're ranking in the first place. If we're not interested in ranking for the purpose of helping people get jobs, then what the hell are we doing? Further, asking for opinions about a department is subject to a wide variety of cognitive biases, like the availability heuristic.

"Ok smart guy, if this method is insufficient what's a better one?" I'm glad you asked. My answer isn't all that deep: if you want to know how well a department does placing its graduates, look at the department's placement rates. It's a direct measure of what we're interested in. So rather than ranking programs based on philosophers' opinions, rank programs based on their placement rates.

"Ok smart guy, but it says on the PGR's website that grad students should use placement data when making their decision where to go to grad school." That's cool, but then what value does the PGR add? What can the PGR predict that placement rates can't (or can't predict as well)?

One assumption of the methodology of the PGR is that philosophers have some accurate sense of program placement rates, but that seems implausible. Do any of these folks know what Harvard's or Brown's or CUNY's or Villanova's placement rates were last year?
Another assumption that the method needs is that the quality of the philosophers a program has is an indicator of how well it places students. Higher quality philosophers mean higher placement rates. But this, if true, isn't obvious at all. And it's not obvious how we would measure it to come up with evidence for it. We can get numbers for placement rates, sure, but what's our metric for quality philosophy? Surveys, probably. In that case we're left with looking for correlations between subjective measures of philosophical quality and placement rates. AT BEST this might give us evidence for whether impressions of philosophical quality correlate with placement rates. But then we're really looking at philosophers' impressions, not program quality, and their correlations with objective phenomena.

That's all for now. Later I'll consider whether the PGR is good for getting a measure of the quality of philosophy being done at a program. But first, some other general gripes:

1. We get mean values, but why not median and mode?
2. What about standard deviation or other measures of variance?
3. Why aren't the raw data available?

These are easily remedied gripes and I hope that the editors fix them soon!

It's been a minute. I've had a lot on my plate the last month or so and haven't been able to do as much with scraping Twitter as I'd like. But I've got some time this morning, so I thought I would look at DN's Twitter feed content. I loaded the content from the most recent 3200 posts (again, Twitter API limits), did some cleaning, took out the stop words (e.g. "the", "a", etc.), did a count of the 50 most popular terms, and scaled them to the most popular term. So "philosophy" on the right side of the graph is the most-used term (with 1500 occurrences over the past 6k tweets) and the counts are scaled relative to that. "Philosophy", "philosopher", and "online" are the most common, which isn't too surprising for the Twitter account of an online philosophy news site.
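For anyone who wants to try the same kind of count on their own text, here's a minimal sketch of the pipeline just described: lowercase and tokenize, drop stop words, count, keep the top terms, and scale every count to the most common one. It is not the code behind the graphs; the stop-word list is a tiny stand-in, the sample posts are made up, and `stop_prefixes` is a hypothetical parameter for dropping a whole family of terms (e.g. anything starting with "philosoph").

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real analysis would use a much longer one.
STOP_WORDS = {"the", "a", "an", "and", "of", "for", "to", "or", "not", "in", "on"}


def tokenize(text):
    """Lowercase the text and pull out runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


def scaled_term_counts(posts, stop_words=STOP_WORDS, stop_prefixes=(), top_n=50):
    """Return the top_n (term, count / highest_count) pairs, most common first."""
    counts = Counter(
        token
        for post in posts
        for token in tokenize(post)
        if token not in stop_words and not token.startswith(tuple(stop_prefixes))
    )
    top = counts.most_common(top_n)
    if not top:
        return []
    highest = top[0][1]
    return [(term, count / highest) for term, count in top]


# Made-up posts standing in for real tweets (real ones would need URL/handle cleanup).
sample_posts = [
    "Philosophy online: the philosophy of mind",
    "Online resources for students",
]

for term, share in scaled_term_counts(sample_posts):
    print(f"{term:12s} {share:.2f}")
```

Because everything is divided by the largest count, one dominant term squashes the rest of the chart, which is the reason to try a second pass with that term filtered out.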
I added "philosoph*" as a stopword. Now let's look at the top 50. One thing to note is that we don't have the sharp drop-off like we saw in the previous graph. This suggests that DN tweets "philosophy" A LOT (again, unsurprising), but without the outlier we can see some more structure. "Online" takes the cake with the greatest number of occurrences. We see mentions of "online" and "resources" quite a bit now. "Students" comes in around 0.50, "academia" and "faculty" around 0.30, and "apa" and "graduate" at a little under 0.25.

What to conclude from the data? If you felt that the DN Twitter account was largely about sex & gender or diversity or any other New Infantilism issues in particular, you'd be mistaken. It's largely about the profession in broad strokes (as well as some comics, like "chaospet" or "phi or not to phi"). (Btw, "or", "not", and "to" are all stop-words and so not included in the counts. So every mention of the comic's name "phi or not to phi" counts as two separate instances of "phi". So where you see it around 0.30, cut that in half and it'll give you a better picture of what the true value is.)

Now let's go from single words to bigrams. Bigrams are word pairs, so in the phrase "Waltz, bad nymph, for quick jigs vex" the bigrams are "waltz bad", "bad nymph", "nymph quick" ("for" is a stop word and hence omitted), etc. Here we'll just do the top 25 bigrams. This confirms what we saw above: DN's Twitter is largely about online philosophy resources aimed at the profession writ large. Folks who are looking for resources in the profession would do well, it would seem, to follow DN on Twitter.

Finally, let's take a look at trigrams. I only did the top 25 because the text is unreadable otherwise. Yep, this confirms what we saw with the unigrams and bigrams. I have to confess that I thought the DN Twitter account was largely about sex and gender issues (i.e. the TERF wars) and chronically linking to folks fighting that battle.
When looking at all the data, my suspicion was wrong. DN's Twitter, more often than not, talks about resources for and within the profession. I've been working on DN's Twitter feed for a few months and across several posts, but this is the end of it. Thanks if you've read along, and thanks especially to Justin Weinberg who agreed to let me look at and share these analyses. Any thoughts you'd like to share are warmly welcomed.
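For the curious, the bigram and trigram counts discussed above can be sketched in a few lines. This is an illustration under assumptions, not the code used for the graphs: the stop-word set is a tiny stand-in, the function names are made up, and n-grams are built within each post after stop words are removed (so "nymph quick" becomes a bigram once "for" is dropped).

```python
import re
from collections import Counter

# Tiny illustrative stop-word list, just enough for the examples in the post.
STOP_WORDS = {"for", "or", "not", "to", "the", "a"}


def content_tokens(text, stop_words=STOP_WORDS):
    """Lowercase, split into words, and drop the stop words."""
    return [t for t in re.findall(r"[a-z']+", text.lower()) if t not in stop_words]


def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined by a space."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(posts, n, top_k=25):
    """Count n-grams post by post so that no n-gram spans two different posts."""
    counts = Counter()
    for post in posts:
        counts.update(ngrams(content_tokens(post), n))
    return counts.most_common(top_k)


print(ngrams(content_tokens("Waltz, bad nymph, for quick jigs vex"), 2))
print(content_tokens("phi or not to phi"))  # the comic's name leaves two "phi" tokens
print(top_ngrams(["phi or not to phi", "phi or not to phi"], 2))
```

The second print shows why the unigram count for "phi" is inflated: once the stop words are gone, one mention of the comic contributes two tokens.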


About me

I do mind and epistemology and have an irrational interest in data analysis and agent-based modeling. Old

